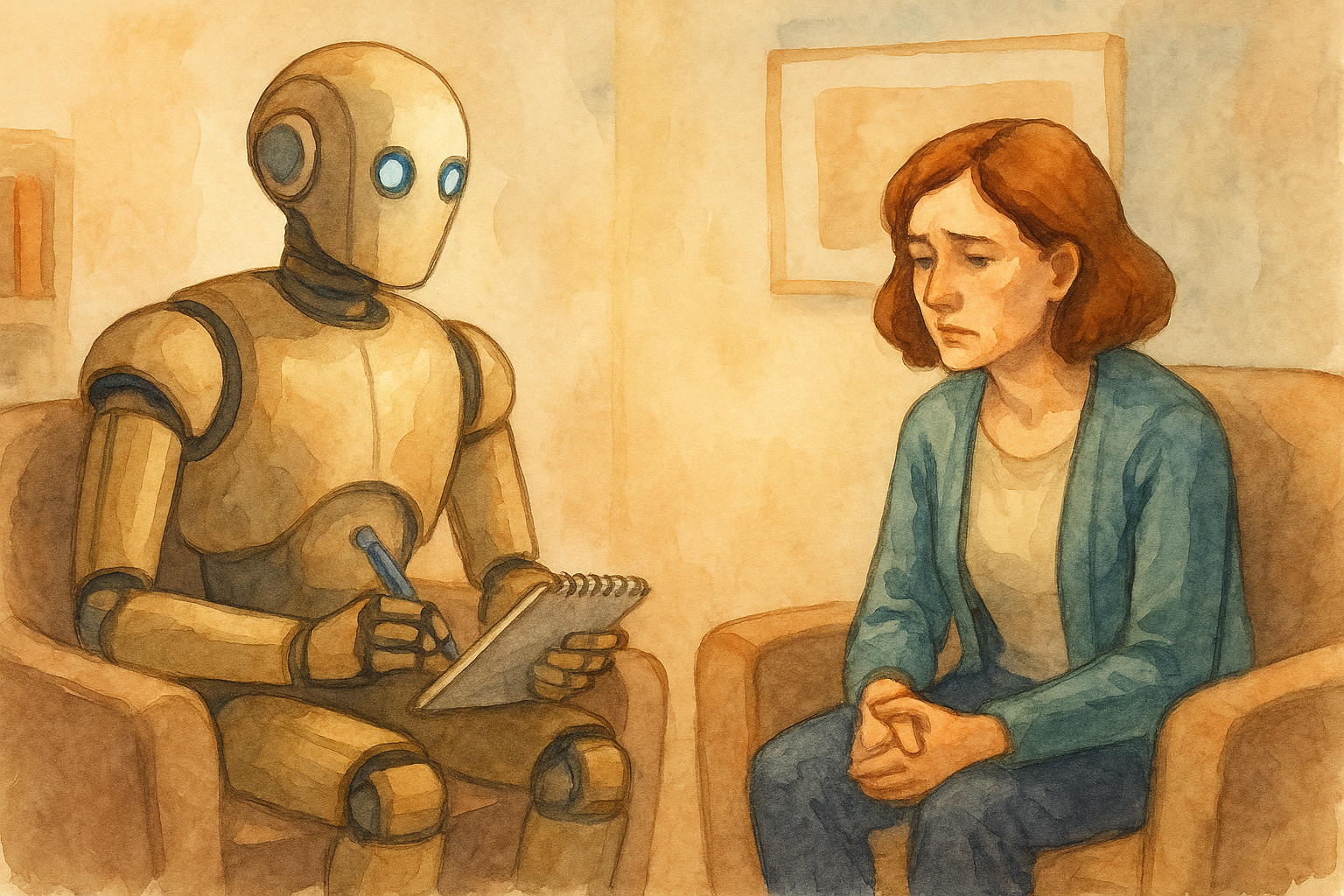

AI is being used in increasingly sophisticated ways to simulate human conversation and provide mental health support. While some apps and chatbots can offer valuable companionship or coping tools, using AI as a substitute for human psychotherapy carries significant risks—especially for clients with trauma histories. One emerging danger is that AI moves too fast, pushing clients into emotional content before they are ready, which can lead to re-traumatisation.

AI and the Absence of Attunement

Human therapists are trained to listen not just to the words but to the body language, facial expressions, tone of voice, and pauses of the client. They are also trained to feel into the client’s experience—what Daniel Stern (2004) called “affective attunement.” In contrast, AI models, no matter how refined, respond only to textual input and cannot perceive or respond to the client’s non-verbal cues or embodied state.

Even with sophisticated natural language processing, AI lacks the capacity for co-regulation, the interpersonal neurobiological process whereby a calm and attuned therapist helps to stabilise the nervous system of a dysregulated client (Porges, 2011; Siegel, 2015). This absence can be particularly dangerous when clients are working through trauma.

The Rothschild Model and the Risk of Going ‘Too Fast’

Babette Rothschild (2000) emphasised that trauma work should not begin with trauma content. Instead, she advocated for stabilisation and resourcing first, often for many months, before processing traumatic memories. This pacing is essential for avoiding emotional flooding and re-traumatisation.

AI, especially if prompted directly to process trauma (“Tell me about your childhood abuse”), has no mechanism for pacing or containment. It cannot discern whether the client has sufficient internal or external resources to stay grounded. The result? The client may become overwhelmed, dissociated, or re-traumatised by prematurely entering traumatic material without appropriate support.

False Intimacy and Misattunement

Another danger is the illusion of intimacy. AI models often respond in warm, empathetic tones. This can lead to a false sense of being seen or understood, especially for clients with attachment wounds. The responses may sound attuned but are ultimately based on probabilistic pattern-matching, not genuine relational presence. For some clients, the later recognition that they were “talking to a machine” can itself be shaming or re-traumatising, especially if deep personal content was shared. A similar problem can even appear during Zoom calls with a human therapist: the client may over-share and then suffer a shame backlash.

A Real Example

You might be thinking, ‘Well, you would say all this: you are a therapist!’ However, a recent example demonstrates some of the theory outlined above. A new client came to a colleague after using AI as a psychotherapist. The client arrived with reams of output from the AI, having attempted to process their trauma at home. When my colleague dug into this, it emerged that the client had been using the AI for only a few days. The client had gone into their trauma far too fast and arrived at their first session dazed and staring into the middle distance. Fortunately, the client had enough sense left to seek out a human therapist. My colleague was supportive; the client was, after all, genuinely trying to work on deep psychological wounds. My colleague suggested to the client that one big difference is that a psychotherapist, attuning to and observing the client throughout a session, knows when to apply the brakes and suggest that the client slow down. The AI had no brakes, just more and more information.

I suggested to my colleague that another issue is that AI is available 24/7, whereas the 167 hours between weekly sessions are needed for clients to process what goes on in a session. With AI’s constant availability there is no space for processing. As my own therapist says, ‘most of the healing happens between sessions’.

Ethical Concerns and Clinical Boundaries

There are further ethical concerns:

- Confidentiality: AI interactions may be stored on external servers, with unclear data protections.

- Accountability: AI does not belong to a regulated profession. If harm occurs, there is no recourse.

- Lack of Supervision: AI does not consult supervisors or peers; it cannot reflect on its mistakes or learn from them.

The Danger of Disembodied Therapy

AI-based therapy is entirely disembodied. There is no sense of presence, no shared room, no physical grounding. Somatic therapies, trauma-informed practices, and even the simple embodied presence of another human being are all absent. This can exacerbate disconnection from self—a common feature of trauma—and encourage intellectualisation rather than integration.

When Might AI Be Helpful?

There are, of course, low-risk uses of AI in mental health, including:

- Mood tracking

- Psychoeducation

- Coping strategy reminders

- Support outside of sessions (e.g. journaling prompts)

- Schemes such as SilverCloud, which gives clients immediate access to CBT. If they engage, human support becomes available at the two-week stage.

- An adjunct to human therapy, not a replacement

For neurodivergent clients, AI can offer non-judgemental, 24/7 access to information or structure. But even here, a trauma-informed therapist must guide its use carefully. I have found that some clients with ADHD really struggle with phone calls and prefer to use an online booking system to arrange an initial face-to-face session.

Conclusion

AI is not a therapist. While it can simulate conversation and even mirror some therapeutic language, it lacks the relational, embodied, and ethical foundations required for safe psychotherapy—especially with trauma clients. If the work goes too fast, without pacing, attunement, or resourcing, it can do real harm. Therapists, clients, and developers alike must proceed with caution, always prioritising safety, relationship, and ethics over technological excitement.

References

- Porges, S. W. (2011). The Polyvagal Theory: Neurophysiological Foundations of Emotions, Attachment, Communication, and Self-regulation. New York: W. W. Norton & Company.

- Rothschild, B. (2000). The Body Remembers: The Psychophysiology of Trauma and Trauma Treatment. New York: W. W. Norton & Company.

- Siegel, D. J. (2015). The Developing Mind: How Relationships and the Brain Interact to Shape Who We Are. New York: Guilford Press.

- Stern, D. N. (2004). The Present Moment in Psychotherapy and Everyday Life. New York: W. W. Norton & Company.